Computer Science Team Presents Research on the ‘Security of Deep Learning based Automated Lane Centering under Physical-World Attack’ at USENIX Security Symposium

A team of researchers from UC Irvine’s Donald Bren School of Information and Computer Sciences (ICS) presented a paper titled “Dirty Road Can Attack: Security of Deep Learning based Automated Lane Centering under Physical-World Attack” at the 30th USENIX Security Symposium on Aug. 13, 2021.

Meet the Research Team/Authors:

- Takami Sato, Computer Science Ph.D. student, UC Irvine

- Junjie Shen, Computer Science Ph.D. student, UC Irvine

- Ningfei Wang, Computer Science Ph.D. student, UC Irvine

- Yunhan Jack Jia, Research Scientist, ByteDance Ltd.

- Xue Lin, Assistant Professor of Electrical and Computer Engineering, Northeastern University

- Qi Alfred Chen, Assistant Professor of Computer Science, UC Irvine

Research Overview

Automated Lane Centering (ALC) systems are widely available in today’s vehicles, including Tesla, GM Cadillac, Honda Accord, Toyota RAV4 and Volvo XC90 models. While ALC is very convenient for human drivers, it is highly security- and safety-critical. Deep Neural Network (DNN)-based lane detection is now commonly used for ALC, even though the vulnerability of DNN models to adversarial attacks has been widely reported. Recognizing this, a team of ICS researchers conducted the first security analysis of state-of-the-art DNN-based ALC systems in their designed operational domains (i.e., roads with lane lines) under physical-world adversarial attacks.

Significance of Research

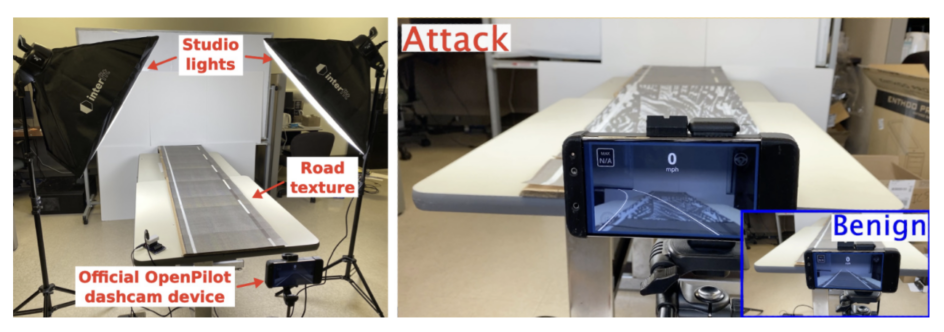

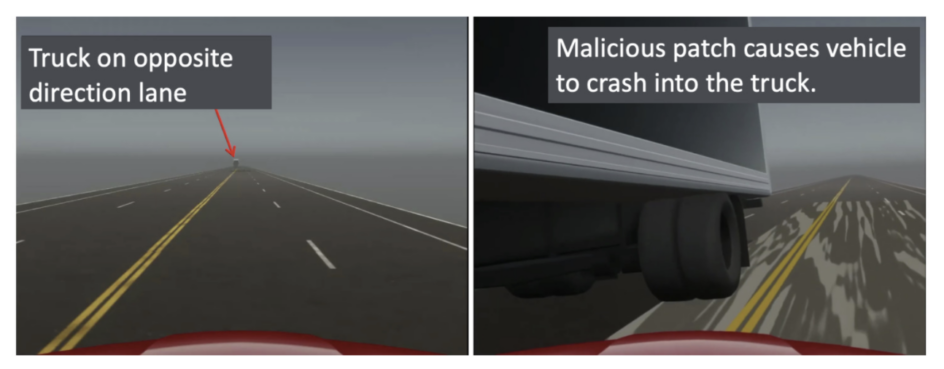

The study demonstrates that a vulnerability at the DNN model level can lead to an attack effect at the level of the whole ALC system. The research team designed a Dirty Road Patch (DRP) attack, in which a road patch with carefully designed dirty patterns (i.e., adversarial perturbations) misleads a production ALC system, OpenPilot, and causes it to deviate from its driving lane within the average driver’s reaction time (2.5 seconds). They then evaluated DRP attacks with real-world driving traces, a physical-world miniature-scale setup, and a production-grade simulator. The team also evaluated the safety impact on a real vehicle by injecting attack traces.
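To make the timing criterion above concrete, here is a minimal sketch of how one might check whether a recorded lateral-deviation trace leaves the lane within the 2.5-second reaction time. It is an illustration only, not the research team's evaluation code: the function names, the sample trace, and the 0.75 m departure threshold are all hypothetical, and timestamps are assumed to be measured from the moment the patch begins to influence steering.

```python
from typing import Optional, Sequence

# Average driver reaction time cited in the article (seconds).
REACTION_TIME_S = 2.5


def time_to_lane_departure(
    times_s: Sequence[float],
    lateral_deviation_m: Sequence[float],
    departure_threshold_m: float,
) -> Optional[float]:
    """Return the first timestamp at which |deviation| reaches the threshold.

    Returns None if the trace never reaches the threshold.
    """
    for t, d in zip(times_s, lateral_deviation_m):
        if abs(d) >= departure_threshold_m:
            return t
    return None


def departs_within_reaction_time(
    times_s: Sequence[float],
    lateral_deviation_m: Sequence[float],
    departure_threshold_m: float,
    reaction_time_s: float = REACTION_TIME_S,
) -> bool:
    """True if the vehicle crosses the threshold no later than reaction_time_s."""
    t = time_to_lane_departure(times_s, lateral_deviation_m, departure_threshold_m)
    return t is not None and t <= reaction_time_s


# Hypothetical trace: deviation from lane center sampled every 0.5 s.
times = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]
deviation = [0.0, 0.1, 0.3, 0.6, 0.9, 1.2]
threshold = 0.75  # hypothetical lane-departure threshold in meters

print(time_to_lane_departure(times, deviation, threshold))
print(departs_within_reaction_time(times, deviation, threshold))
```

With this made-up trace, the threshold is first reached at the 2.0-second sample, so the check reports a departure inside the reaction-time window. A real evaluation would take the threshold from the lane and vehicle widths of the scenario being tested.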

Broader Impact of Research

Considering the popularity of ALC and the safety impacts shown in the work, the hope is that the findings and insights will draw the community’s attention and inspire follow-up research. The research team informed 13 companies developing ALC systems; 10 companies have replied and have started investigations. The team has also started a follow-up project to design a defense method against DRP attacks.

Research Highlights

- First to systematically study the security of DNN-based ALC in its designed operational domains under physical-world adversarial attacks.

- Evaluated the attack on a production ALC system with real-world driving traces, a physical-world miniature-scale setup, and a production-grade simulator, and assessed its stealthiness, deployability, and robustness to different viewing angles and lighting conditions.

- Evaluated the safety impact on a real vehicle by injecting attack traces (10/10 crash rate with dummy obstacles).

- Evaluated five DNN model-level defenses, discussed sensor/data fusion-based defenses, and proposed short-term mitigation suggestions.

- Informed 13 companies developing ALC systems; 10 companies (77%) have replied and have started investigations.

Miniature-scale setup and results:

Simulator experiment:

Safety impact evaluation on a real vehicle by injecting an attack trace:

Visit the project website for more information, including a summary, demos and FAQs about the research project.