Culture of Collaboration Leads to Cutting-Edge Work in AI

Recently, UCI announced that researchers had developed a hybrid human-machine framework for AI systems that can complement human expertise:

From chatbots that answer tax questions to algorithms that drive autonomous vehicles and dish out medical diagnoses, artificial intelligence undergirds many aspects of daily life. Creating smarter, more accurate systems requires a hybrid human-machine approach, according to researchers at the University of California, Irvine. In a study published this month in Proceedings of the National Academy of Sciences, they present a new mathematical model that can improve performance by combining human and algorithmic predictions and confidence scores.

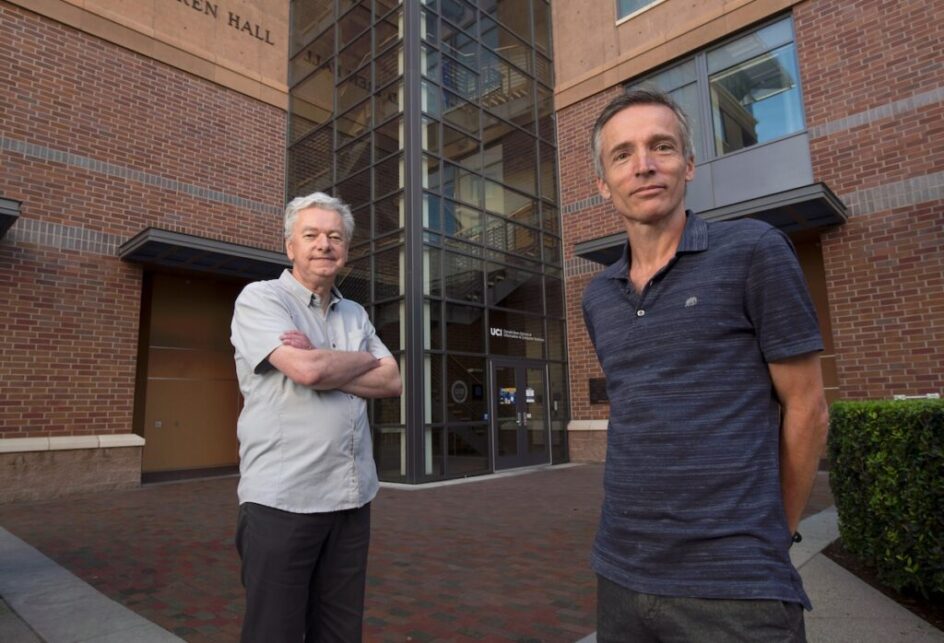

The seeds for this cutting-edge work by researchers in the Donald Bren School of Information and Computer Sciences (ICS) and the School of Social Sciences were planted about two decades ago. The collaboration began with Padhraic Smyth, Chancellor’s Professor in the Computer Science and Statistics Departments, and Mark Steyvers, a professor in the Department of Cognitive Sciences.

“One of the really nice things about UCI is that there’s a lot of interdisciplinary activity,” says Smyth, whose research is supported through a Qualcomm Faculty Award. “There is this culture of collaboration, so cognitive scientists come to Bren Hall to attend our AI and machine learning talks, and we go to their talks.” In fact, Smyth and Steyvers have been meeting periodically since the early 2000s. “We’d chat over coffee about our projects,” says Smyth. “We speak different languages, but we’re both interested in intelligence — just from different perspectives. Computer science emphasizes artificial, and cognitive sciences emphasizes natural, and there’s a lot of overlap.”

So when the National Science Foundation (NSF) put out a call for research on how humans and AI work together, Smyth and Steyvers put together two proposals, both of which were funded in 2019. One grant focuses on hybrid human-algorithm predictions, exploring how to balance effort, accuracy and perceived autonomy; the other focuses on assessing machine learning algorithms in the wild. This work led to the PNAS paper recently announced by UCI, “Bayesian Modeling of Human-AI Complementarity.” The paper was co-authored by Steyvers and Smyth, along with Heliodoro Tejeda, a graduate student in cognitive sciences, and Gavin Kerrigan, a Ph.D. student in computer science funded by the HPI Research Center at UCI.

The grants have led to other papers co-authored by Steyvers and Smyth as well. They worked with Kerrigan on “Combining Human Predictions with Model Probabilities via Confusion Matrices and Calibration,” a paper appearing in proceedings for the Thirty-fifth Conference on Neural Information Processing Systems (NeurIPS 2021), and they worked with computer science Ph.D. students Disi Ji and Robby Logan on “Active Bayesian Assessment of Blackbox Classifiers,” a paper appearing in proceedings for the Thirty-fifth AAAI Conference on Artificial Intelligence. “This line of work is part of a larger collaboration with about five or six Ph.D. students,” says Smyth.

One of those students is Markelle Kelly, a computer science graduate student who last summer received a fellowship through UCI’s Steckler Center for Responsible, Ethical, and Accessible Technology (CREATE). This year, Kelly was awarded a fellowship through the Irvine Initiative in AI, Law, and Society. Both the center and the initiative are helping to facilitate this interdisciplinary work.

“The human-machine collaboration between the cognitive sciences and computer science departments explores questions about how people and machines can work together as a team,” says Kelly, who is currently investigating how humans and AI can understand the strengths and weaknesses of each other to better delegate tasks. “By working alongside cognitive science researchers, I can better understand theories about the human side of this problem, and incorporate these ideas into potential solutions. The resulting cross-disciplinary experiments and findings are founded on a much more nuanced perspective than if only machine learning experts were involved.”

Smyth agrees. “Computer science historically has been a little bit averse to getting humans in the loop, but I think AI and machine learning researchers now realize that, although our algorithms have been developed and tested in our labs for a decade or two without attracting much attention, they are now being widely applied to address real problems involving real people,” he says. “As a consequence, getting students, early in their career, involved in these kinds of interesting projects and in discussions related to how AI is being used will help ensure AI is used more responsibly.”

— Shani Murray